The k-means clustering algorithm is one of the simplest unsupervised machine learning algorithms, which can be used to automatically recognise groups of similar points in data without any human intervention or training.

The first step is to represent the data you want to group as points in an n-dimensional space, where n is the number of attributes the data have. For simplicity let’s assume we just want to group the ages of visitors to a website – a one-dimensional space. Let’s assume the set of ages is as follows:

{15,15,16,19,19,20,20,21,22,28,35,40,41,42,43,44,60,61,65}

Now there are a number of ways we could separate these points out; one obvious way that springs to mind is simply to iterate over the set and find the largest gap between adjacent ages. For some sets this can work quite nicely, but in our case it would give us quite unbalanced groups; 15-44 and 60-65, the latter containing just 3 points, and the former being far too broadly distributed.

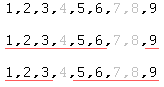

Using k-means clustering we can obtain more tightly defined groups; consider the set {1,2,3,5,6,9} – using the simplistic greatest distance technique we gain two sets {1,2,3,5,6} and {9}, while the k-means algorithm produces a grouping of {1,2,3} and {5,6,9} – more cohesive clusters with less dispersion of points inside the groups – k-means tries to minimise the sum of squares within a cluster:

Notice how in the first set of clusters the outermost points of the first cluster are quite far away from the middle of the cluster, while in the second set, the points in both clusters are closer the center of their groups; making these clusters more well-defined, less sparsely populated.

Now let’s have a look at the algorithm and work through the example age data to see if we can get a tighter grouping than 15-44 and 60-65 using clustering:

The algorithm:

Firstly we pick k random points in the space as initial cluster co-ordinates, where k=the number of clusters you wish to obtain.

Then for each cluster, we work out the average value for that cluster, known as the centroid of the cluster.

Then we iterate over all the points, including those already assigned to clusters, and we assign each point to the cluster with the closest centroid.

We then repeat the process of finding the centroids and assigning points until there is no change between iterations.

Example:

Let’s say we want two clusters, and initially set cluster one = 16 and cluster two=22. In full, the process of clustering our example data looks like this:

Initial Clusters:

centroid 16 Â Â [16]

centroid 22Â Â Â [22]

Now assign all points closer to 16 than 22 to cluster one:

Iteration 1 clusters:

centroid 15.33Â Â Â [15,15,16]

centroid 36.25Â Â Â [19,19,20,20,21,22,28,35,40,41,42,43,44,60,61,65]

Now assign all points closer to 15.33 than 36.25 to cluster one:

Iteration 2 clusters:

centroid 18.56Â Â Â [15,15,16,19,19,20,20,21,22]

centroid 45.90Â Â Â [28,35,40,41,42,43,44,60,61,65]

Now assign all points closer to 18.56 than 45.90 to cluster one:

Iteration 3 clusters:

centroid 19.50Â Â Â [15,15,16,19,19,20,20,21,22,28]

centroid 47.89Â Â Â [35,40,41,42,43,44,60,61,65]

Now assign all points closer to 19.50 than 47.89 to cluster one:

Iteration 4 clusters:

centroid 19.50Â Â Â [15,15,16,19,19,20,20,21,22,28]

centroid 47.89Â Â Â [35,40,41,42,43,44,60,61,65]

No change between iterations; this is known as convergence and means we can stop running the algorithm. Using clustering, we have gained 15-28 and 35-65, which are much more closely grouped clusters than 15-44 and 60-65.

The initial choice of centroids can affect the output clusters, so the algorithm is often run multiple times with different starting conditions in order to get a fair view of what the clusters should be.

In a multi-dimensional space, we define distance as the Euclidean distance, e.g. if we imagine a 3-d space (your office or bedroom) and two points in it (the light switch and the light bulb), then the distance between the two is simply the shortest straight line between the points. The Euclidean distance gives us this measure.

Next time, I’ll present PHP and javascript implementations of the k-means clustering algorithm for n dimensions so you can see how it works in the code, and have a chance to use clustering in your own applications. Any questions or comments? Chat below!

#1 by Happy User on March 18, 2010 - 9:48 pm

Great writeup!

#2 by Howard Yeend on March 19, 2010 - 12:13 am

:D Thanks!

#3 by hrushi on September 22, 2010 - 1:33 am

i want kmeans clustering for n dimensions with large ste of data

#4 by John Syrinek on July 12, 2011 - 6:53 am

I just wrote a quick k-cluster algorithm in PHP for demo purposes: http://johntron.com/programming/k-cluster-algorithm-in-php/

#5 by bcmoney on September 16, 2011 - 6:49 pm

Great info, I don’t suppose you have a link to your JS or PHP implementations of the K-Means Clustering algorithm?

#6 by anichkuwar on December 7, 2011 - 7:10 pm

hey..great post..

but i was just wondering..if we were given around 20,000 articles..and we need to cluster them acc to their category say music,movies,news,etc,. (obviously two intersecting cluster can be used for recommendation)..but how would i do that ??

i am not able to start code..as soon as i start coding, i again end up reading algorithms..

so can you help me in this ??

One more doubt don’t you think this approach would be complex in real time scenario and will have higher computation time ??

#7 by themindflow.eu on December 30, 2011 - 11:04 am

#6: google for “document classification”. It is most often done with supervised learning algorithms, namely Naive Bayes. Dont worry, it is almost as easy to grasp as k-means .

#8 by tor on April 29, 2012 - 8:46 am

One optimization for large sets is to produce k means for only a small, randomly selected subset of the points and then fit the remaining data to these groups. The result is just about the same.

However, I would love to see a similar algorithm that could pick k on its own. If only a small range for k is possible or probable, one can of course compute k means for several k and choose the best oneself (according to some preference), but if the k can have a wide range of values, this is very expensive. Anyone heard of such an algorithm?